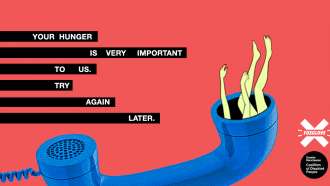

We forced the DWP to explain its benefits fraud algorithm: here’s what we found

In 2021, Foxglove began an investigation with the Greater Manchester Coalition of Disabled People into the Department for Work and Pensions’ (DWP) secret benefit fraud investigations algorithm.

Our original goal was to bring a legal challenge against the DWP’s algorithm, which appeared to unfairly target people claiming disability benefits as fraudsters. But first we needed to know more about it.

After three years, here’s what we’ve learned:

- The algorithm calculates and assigns a “risk score” to each person claiming a benefit.

- Once these scores are generated, each “case” is referred to human case agents for further review.

- Every case is reviewed by a human caseworker and we have not been able to prove the algorithm makes any decisions about the claim status.

- Caseworkers do not see the risk score and, in the DWP’s words: “the risk score is not used to inform or influence any other fraud and error activity or potential investigation.”

- Caseworkers receive a randomised mix of high and low risk score cases.

- It appears that it is the human decision maker who determines the claim status.

- As a result, the risk score and algorithm don’t appear to control investigations and/or claim status.

In summary, the algorithm does not influence the outcome of the caseworker’s review. In other words, to the best of our knowledge, the DWP algorithm is not making decisions that result in disabled people being unfairly targeted for benefit fraud investigations.

While we are glad we’ve been able to establish these facts, the DWP should have published all of this information prior to the introduction of the algorithm for us all to consider and scrutinise.

We know algorithms often contain bias and disproportionately affect vulnerable groups. Without full and proper transparency and independent scrutiny, these biases remain hidden, unchallenged and deeply harmful to some of the most vulnerable individuals and communities in the UK.

Indeed, we suspect the DWP is using other algorithms that are unfairly hitting disabled people. Last year, the head of Universal Credit admitted their systems contain bias because “you have to have bias to catch fraudsters”.

The government has announced heavy cuts to the essential funds many disabled people need to live on, as well as a huge increase in the use of AI in public services. The safeguards laid out are vague and lack detail.

So, while this particular case might be over, our fight to ensure that changes to public service provision involving AI and machine learning are transparent, safe and fair will continue.

For supporters who donated to the GMCDP’s crowdfunder, now that this case has come to an end, you can request a refund. Or if you prefer, the GMCDP can use your donation for their ongoing vital work supporting their members and disabled people across the UK.

At Foxglove, we plan to proactively monitor the government’s use of algorithms and machine-learning tools to figure out which of these systems we are best placed to challenge, while maintaining a close relationship with the GMCDP, to support them however we can in future.
